The Importance of High-Quality Datasets in Achieving Machine Learning Success

Introduction:

In the contemporary landscape of artificial intelligence (AI) and machine learning (ML), data has emerged as a critical asset. How well an ML model learns, predicts, and executes tasks depends fundamentally on one essential element: the quality of the dataset used for training. Sophisticated algorithms and computational resources play a significant role, but even the most advanced models will struggle without access to high-quality, reliable data.

Significance of High-Quality Datasets

1. Basis for Learning

Machine learning algorithms derive patterns and relationships from data. If the dataset is flawed, incomplete, or biased, the model's performance will reflect these deficiencies. High-quality datasets provide a strong foundation for learning, resulting in enhanced predictions and outcomes.

2. Reducing Bias

Bias present in datasets can lead to biased models, with far-reaching negative consequences. For instance, a model trained on data in which some demographic groups are underrepresented may produce unfair results for those groups. Ensuring diversity and balance within the data is vital to mitigating bias and promoting fairness in ML applications.
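One simple, partial check for this kind of imbalance is to measure how each group is represented in the data. The sketch below assumes records are Python dictionaries with a hypothetical `group` field and treats any group below an arbitrary share threshold as underrepresented. Real fairness audits examine much more than raw counts, such as label rates and model error per group.

```python
from collections import Counter

def group_shares(records, attribute):
    """Return the fraction of records that fall into each value of `attribute`."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def underrepresented(records, attribute, min_share=0.2):
    """List groups whose share of the dataset is below `min_share` (an assumed threshold)."""
    shares = group_shares(records, attribute)
    return sorted(group for group, share in shares.items() if share < min_share)

# Hypothetical toy dataset: 8 records from group "A", 2 from group "B".
data = [{"group": "A"}] * 8 + [{"group": "B"}] * 2
print(group_shares(data, "group"))                      # {'A': 0.8, 'B': 0.2}
print(underrepresented(data, "group", min_share=0.25))  # ['B']
```

Flagging a group only tells you where to look; whether to collect more data, reweight, or resample is a separate decision.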

3. Enhanced Generalization

High-quality, representative datasets help models generalize, that is, perform well on previously unseen data. They also reduce the risk of overfitting, where a model becomes so specialized to its training data that it fails to adapt to new situations.
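A common way to estimate generalization is to hold out part of the data and compare accuracy on the training portion with accuracy on the held-out portion. The sketch below uses a deliberately overfit "model", a hypothetical lookup table that memorizes training labels, to make the gap obvious. The function names and the 25% test fraction are illustrative choices, not a prescribed method.

```python
import random

def train_test_split(items, test_fraction=0.25, seed=0):
    """Shuffle a copy of `items` and split it into (train, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

def accuracy(model, examples):
    """Fraction of (x, y) pairs for which model(x) equals y."""
    return sum(model(x) == y for x, y in examples) / len(examples)

def memorizer(train):
    """A deliberately overfit 'model': it remembers training labels and nothing else."""
    table = dict(train)
    return lambda x: table.get(x)  # returns None for any input not seen in training

# Hypothetical toy data: 40 distinct inputs labelled by parity.
data = [(x, x % 2) for x in range(40)]
train, test = train_test_split(data)
model = memorizer(train)
print(accuracy(model, train), accuracy(model, test))  # 1.0 0.0
```

A perfect training score paired with a poor held-out score is the signature of overfitting; only the held-out number says anything about unseen data.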

4. Improved Performance Metrics

Performance metrics such as accuracy, precision, recall, and F1 score depend on the quality of both the training data and the evaluation data: noisy or mislabeled test labels distort the scores themselves. A carefully curated dataset improves both model performance and the reliability of these measurements.
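For a binary classifier, these metrics are computed from counts of true positives, false positives, and false negatives. The minimal sketch below works on plain lists of labels; the function name is hypothetical, and libraries such as scikit-learn provide equivalent, better-tested implementations. Every metric is computed against `y_true`, so mislabeled evaluation data skews the scores directly.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical labels and predictions for ten examples.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print({name: round(value, 3) for name, value in metrics.items()})
# {'accuracy': 0.7, 'precision': 0.6, 'recall': 0.75, 'f1': 0.667}
```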

Attributes of a High-Quality Dataset

To develop successful ML systems, it is crucial to recognize the attributes that define a high-quality dataset. Key characteristics include:

- Accuracy: The data should closely represent reality.

- Completeness: Missing values or records can hinder training and lead to erroneous predictions.

- Relevance: The data must be applicable to the specific problem being addressed.

- Uniformity: Data must adhere to a consistent structure and format.

- Variety: Incorporating a range of diverse data allows the model to encounter a broad spectrum of scenarios.

- Timeliness: Data must be current to remain applicable.
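Some of these attributes can be checked automatically before training. The sketch below flags records that are incomplete, that break a uniform date format, or that are older than a freshness window. The schema (`id`, `label`, `collected_on`), the ISO date format, and the 365-day window are hypothetical assumptions; accuracy, relevance, and variety usually need domain review rather than a script.

```python
from datetime import date, datetime

# Hypothetical schema: every record must carry these fields.
REQUIRED_FIELDS = ("id", "label", "collected_on")

def audit(records, max_age_days=365, today=None):
    """Return record ids grouped by the first quality problem found in each record."""
    today = today or date.today()
    issues = {"incomplete": [], "nonuniform_date": [], "stale": []}
    for record in records:
        record_id = record.get("id")
        # Completeness: any required field missing or empty.
        if any(record.get(field) in (None, "") for field in REQUIRED_FIELDS):
            issues["incomplete"].append(record_id)
            continue
        # Uniformity: dates are assumed to follow the ISO format YYYY-MM-DD.
        try:
            collected = datetime.strptime(record["collected_on"], "%Y-%m-%d").date()
        except ValueError:
            issues["nonuniform_date"].append(record_id)
            continue
        # Timeliness: older than the assumed freshness window.
        if (today - collected).days > max_age_days:
            issues["stale"].append(record_id)
    return issues

records = [
    {"id": 1, "label": "cat", "collected_on": "2024-03-01"},
    {"id": 2, "label": "", "collected_on": "2024-03-02"},
    {"id": 3, "label": "dog", "collected_on": "03/04/2024"},
    {"id": 4, "label": "dog", "collected_on": "2019-01-15"},
]
print(audit(records, today=date(2024, 6, 1)))
# {'incomplete': [2], 'nonuniform_date': [3], 'stale': [4]}
```

Passing `today` explicitly keeps the check reproducible; in a pipeline it would default to the current date.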

Obstacles in Securing High-Quality Datasets

Despite its significance, the acquisition of high-quality datasets frequently presents challenges. Common difficulties include:

- Data Limitations: In certain fields, such as healthcare or rare event forecasting, data availability may be restricted.

- Data Confidentiality and Protection: Ethical and legal factors can limit access to sensitive information.

- Disorganized Data: Data from real-world sources is often unstructured and necessitates considerable preprocessing.

- Annotation Expenses: Labeled data, essential for supervised learning, can incur high costs and require substantial time to produce.

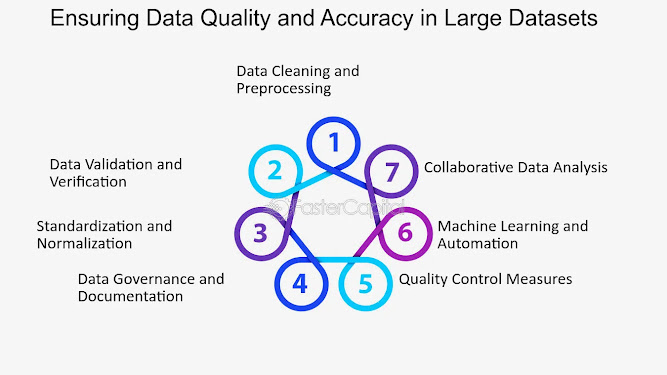

Strategies for Leveraging High-Quality Datasets

To address the challenges associated with data quality, organizations and researchers can implement a variety of strategies:

- Data Augmentation: Generate modified or synthetic examples to expand existing real-world datasets.

- Active Learning: Use the model to identify the most informative unlabeled data points, so that costly human annotation is spent where it helps most.

- Collaboration: Partner with other organizations and open data initiatives to gain access to a wider range of datasets.

- Data Cleaning: Invest in tools and processes that identify and correct errors in raw data.

- Ethical Data Practices: Adhere to guidelines that promote data privacy, fairness, and inclusivity.
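Active learning is often implemented with uncertainty sampling: the current model scores a pool of unlabeled examples, and the ones it is least sure about are sent to human annotators first. The sketch below assumes a binary classifier exposed as a hypothetical function that returns the probability of the positive class, and uses a fixed logistic curve as a stand-in model. Other selection strategies, such as margin-based sampling or query-by-committee, also exist.

```python
import math

def select_for_labeling(unlabeled, predict_proba, k=2):
    """Uncertainty sampling: return the k items whose predicted positive-class
    probability is closest to 0.5, i.e. where the model is least confident."""
    return sorted(unlabeled, key=lambda x: abs(predict_proba(x) - 0.5))[:k]

def toy_model(x):
    """Hypothetical stand-in classifier: a logistic curve centred on x = 5."""
    return 1 / (1 + math.exp(-(x - 5)))

# Hypothetical pool of unlabeled examples, each a single numeric feature.
pool = [0, 2, 4.5, 5.2, 8, 10]
print(select_for_labeling(pool, toy_model, k=2))  # [5.2, 4.5]
```

In a real workflow, the newly labeled items would be added to the training set, the model retrained, and the selection repeated.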

Significance of High-Quality Datasets in Real-World Applications

Various industries, including healthcare, finance, and autonomous vehicles, underscore the critical role of high-quality datasets. For instance:

- Healthcare: Predictive models depend on precise and varied medical data for disease diagnosis and treatment recommendations.

- Finance: Fraud detection systems rely on substantial amounts of accurate transaction data.

- Autonomous Vehicles: Safe navigation necessitates diverse datasets that encompass a range of driving conditions and environments.

Conclusion

High-quality datasets serve as the foundation for effective machine learning applications. By committing to thorough data collection, preprocessing, and curation, organizations can realize the full potential of AI and ML technologies. In an era where data-driven decision-making is becoming increasingly prevalent, prioritizing data quality is essential.

At Globose Technology Solutions, our specialists recognize the importance of high-quality datasets and are committed to delivering solutions that enable organizations to utilize their data efficiently. From data preprocessing to the promotion of ethical practices, GTS supports businesses in attaining machine learning success with accuracy and assurance.
